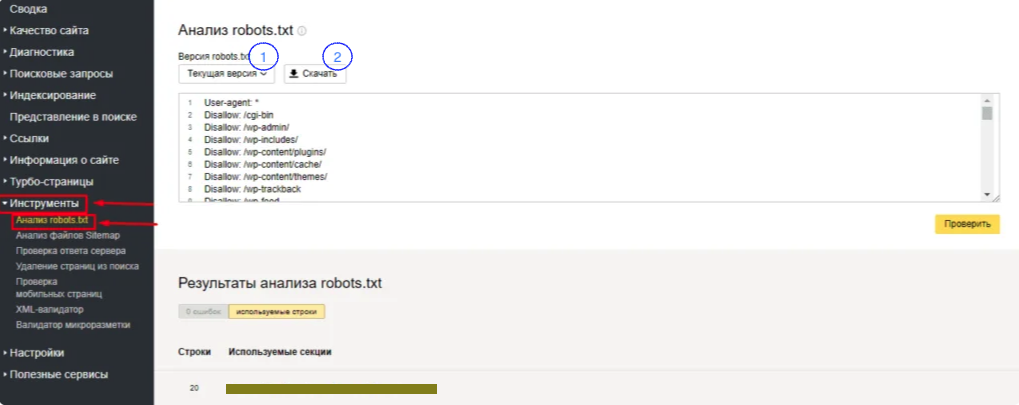

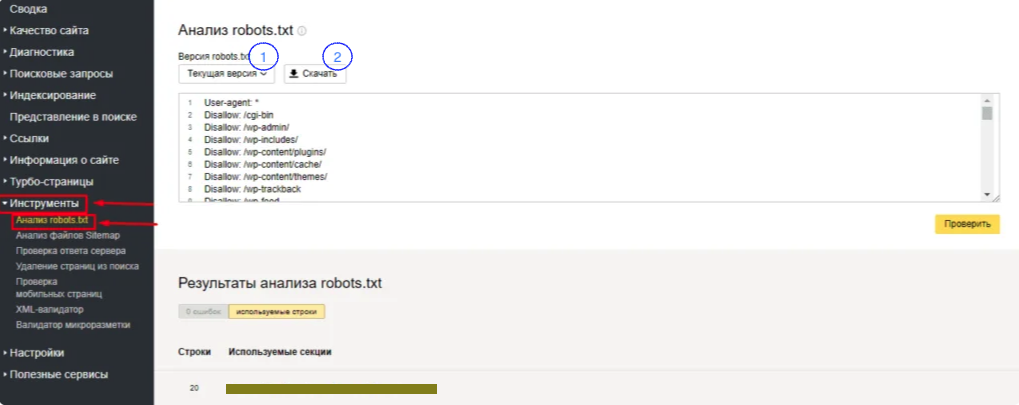

Much like Google Search Console, Yandex Webmaster provides a simple, useful way to test URLs against your robots.txt file.

Yandex’s version also lets you view previous versions of the file and its changelog, which helps with troubleshooting when changes aren’t recorded anywhere else.

From this screen, you can view historical versions from the drop-down (1), or download the file (2).
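If you download two versions from the history, a quick way to see exactly what changed is a unified diff. The sketch below is an illustration, not a Yandex Webmaster feature. It assumes you already have both versions as text and uses Python's standard `difflib`. The `diff_robots` helper, the file labels `robots-old.txt` and `robots-new.txt`, and the sample rules are all hypothetical.

```python
import difflib
from typing import List


def diff_robots(old_text: str, new_text: str) -> List[str]:
    """Return a unified diff between two robots.txt versions, one line per item.

    The file labels are placeholders; nothing is read from disk.
    """
    return list(
        difflib.unified_diff(
            old_text.splitlines(),
            new_text.splitlines(),
            fromfile="robots-old.txt",
            tofile="robots-new.txt",
            lineterm="",
        )
    )


if __name__ == "__main__":
    # Hypothetical versions: the newer one adds a rule blocking /search.
    old = "User-agent: *\nDisallow: /admin/\n"
    new = "User-agent: *\nDisallow: /admin/\nDisallow: /search\n"
    print("\n".join(diff_robots(old, new)))
```

Lines starting with `+` were added in the newer version, and lines starting with `-` were removed; identical versions produce an empty diff.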

When checking whether URLs are blocked or allowed by a website’s robots.txt file, you can test up to 100 URLs per request.
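With a longer list, you will need to split it across several requests. A minimal sketch of that split in Python, assuming the 100-URL limit described above (Yandex could change it). `batch_urls` is a hypothetical helper, not part of any Yandex API.

```python
from typing import Iterator, List

# Per-request limit described for the Yandex Webmaster robots.txt checker.
MAX_URLS_PER_REQUEST = 100


def batch_urls(urls: List[str], size: int = MAX_URLS_PER_REQUEST) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` URLs, preserving order."""
    for start in range(0, len(urls), size):
        yield urls[start:start + size]


if __name__ == "__main__":
    # Hypothetical list of 250 URLs: produces batches of 100, 100 and 50.
    urls = [f"https://example.com/page-{i}" for i in range(250)]
    for number, batch in enumerate(batch_urls(urls), start=1):
        print(f"Request {number}: {len(batch)} URLs")
```

Each batch can then be pasted into the tool as a separate request.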

The results are also straightforward: a red cross means the URL is blocked, and a green checkmark means it is allowed.
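For a rough local pre-check, Python's standard `urllib.robotparser` can give the same kind of blocked/allowed answer. Treat it as an approximation rather than Yandex's own logic: Python's parser applies the first matching rule in file order and does not support the `*` and `$` wildcards, so its answers can differ for files that rely on those. The robots.txt content, the URLs, and the `check_urls` helper are hypothetical.

```python
from typing import Dict, List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""


def check_urls(robots_txt: str, urls: List[str], user_agent: str = "Yandex") -> Dict[str, bool]:
    """Map each URL to True (allowed) or False (blocked) for the given user agent.

    Uses Python's stdlib parser, which does simple prefix matching on the
    path and does not support the * and $ wildcards.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    urls = [
        "https://example.com/",
        "https://example.com/admin/settings",
        "https://example.com/search?q=robots",
        "https://example.com/blog/post-1",
    ]
    for url, allowed in check_urls(ROBOTS_TXT, urls).items():
        # Text stand-ins for the tool's green checkmark and red cross.
        print(f"{'allowed' if allowed else 'BLOCKED'}  {url}")
```

Anything this sketch reports as blocked is still worth confirming in Yandex Webmaster itself, since that tool applies Yandex's own parsing rules.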